Description

Over the past four years, I have engaged consistently with General Data Protection Regulation (GDPR) training and good practice, especially as my roles have required handling student and staff data in digital learning environments.

I have completed a range of GDPR and data protection training courses:

- GDPR: Beginner (12 October 2021, Kaspersky)

- GDPR: Elementary (20 December 2021, Kaspersky)

- General Data Protection Regulation (GDPR) (2 May 2023, Learnlight)

- An Introduction to the General Data Protection Regulation (GDPR) (15 March 2024, Kevin Mitnick Security Awareness Training)

- Data Protection Awareness course (1 August 2024, Imperial College London)

In my work creating digital learning resources, I have implemented GDPR-aligned practices, including:

- Using anonymised screenshots or dummy data when producing guides or training materials

- Ensuring personal data, such as student names or email addresses, is not visible or shared, particularly when supporting diverse, mixed cohorts

- Avoiding personally identifiable information when demonstrating tools, particularly in video or screen recordings

In addition to removing personal data, I ensure that, where possible, screenshots and recordings meet accessibility expectations, such as clear contrast and readable text, in line with WCAG 2.1.

Reflection

As with the accessibility standards explored in Section 3a, I see data protection not just as a compliance requirement, but as a core element of ethical, inclusive learning design. GDPR training has deepened my understanding of how easily personal data can be exposed, particularly in visual media such as screenshots or screen recordings. This awareness has shaped how I plan and deliver digital learning resources, ensuring they are lawful, transparent, and privacy-conscious.

My approach reflects Article 5(1)(c) of the GDPR, which outlines the principle of data minimisation:

"Personal data shall be adequate, relevant and limited to what is necessary in relation to the purposes for which they are processed ('data minimisation')."

This principle is reinforced by Imperial College London's guidance, which defines anonymisation as the removal of all identifiers such that data can no longer be attributed to an individual. It also highlights that pseudonymised data (such as coded identifiers) may still be considered personal data under the law.
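As an illustration only, the difference between pseudonymised and anonymised data can be sketched in a few lines of Python. The records, the `SALT` value, and the function names are hypothetical and are not taken from Imperial's guidance. The sketch shows why a coded identifier can still count as personal data: anyone who holds the salt and the original email address can recompute the code and re-link the row.

```python
import hashlib

# Hypothetical sample records using reserved example.com addresses (not real people).
records = [
    {"name": "Student One", "email": "student.one@example.com", "score": 72},
    {"name": "Student Two", "email": "student.two@example.com", "score": 65},
]

SALT = "illustrative-salt"  # assumption: a secret value held by whoever creates the codes


def pseudonymise(record):
    """Replace direct identifiers with a coded identifier derived from the email."""
    digest = hashlib.sha256((SALT + record["email"]).encode("utf-8")).hexdigest()
    return {"student_code": digest[:8], "score": record["score"]}


def anonymise(record):
    """Drop every identifier and keep only the non-identifying value."""
    return {"score": record["score"]}


pseudonymised = [pseudonymise(r) for r in records]
anonymised = [anonymise(r) for r in records]

# Recomputing the code from a known email matches the stored code,
# so the pseudonymised row can still be attributed to an individual.
relinked = pseudonymise(records[0])["student_code"] == pseudonymised[0]["student_code"]
```

Whether the stripped-down output is genuinely anonymous still depends on context: in a very small cohort, even a single score could point to one person.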

As a result, I have embedded data protection principles into my daily workflow. I default to using anonymised or dummy data, particularly under tight deadlines, and check file names and visible content before sharing learning materials. I maintain a reference list of tools such as Mockaroo and Faker for generating safe-to-use content. When using live systems for demonstrations, I set up test accounts to ensure no personally identifiable information is shown.
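A minimal sketch of generating dummy data for a guide or test account is shown here, using only the Python standard library. Dedicated tools offer far richer output. The name lists, the `TEST` identifier format, and the function names are assumptions made for illustration, and the addresses use `example.com`, a domain reserved for documentation.

```python
import csv
import io
import random

# Hypothetical placeholder name parts; none refer to real people.
FIRST_NAMES = ["Alex", "Sam", "Jordan", "Taylor", "Morgan"]
LAST_NAMES = ["Example", "Sample", "Placeholder", "Demo", "Test"]


def make_dummy_students(count, seed=0):
    """Return `count` fictional student records with example.com addresses."""
    rng = random.Random(seed)  # seeded so the same dummy data can be regenerated
    students = []
    for index in range(1, count + 1):
        first = rng.choice(FIRST_NAMES)
        last = rng.choice(LAST_NAMES)
        students.append({
            "student_id": f"TEST{index:04d}",
            "name": f"{first} {last}",
            # example.com is reserved for documentation use (RFC 2606)
            "email": f"{first.lower()}.{last.lower()}{index}@example.com",
        })
    return students


def to_csv(students):
    """Serialise the records as CSV text, e.g. for import into a test course."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["student_id", "name", "email"])
    writer.writeheader()
    writer.writerows(students)
    return buffer.getvalue()


dummy_students = make_dummy_students(5)
csv_text = to_csv(dummy_students)
```

Seeding the generator means a screenshot can be retaken later with identical dummy records, which keeps a guide consistent across updates.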

These principles also guide my communication practices. For example, I take care when emailing student groups to avoid disclosing personal email addresses without consent.

Over time, I have developed a proactive and pragmatic approach to safeguarding data in digital education. I routinely anonymise content, review materials for privacy risks, delete student data that is no longer required in line with data retention policies, and ensure that my communications reflect data protection principles. I have also shared these practices through informal guidance and staff training.

Rather than seeing GDPR as a constraint, I view it as an essential professional standard that supports ethical, inclusive, and legally compliant digital learning environments. My approach reflects both institutional policies and broader sector expectations around the responsible use of learner data, such as those outlined by the Information Commissioner's Office (ICO).

Summary

This section demonstrates how I have:

- Completed regular GDPR and data protection training to stay up to date with institutional and legal expectations

- Embedded data protection principles into everyday workflows for creating and sharing digital learning resources

- Used anonymised data, dummy accounts, and test environments to avoid exposing personal or sensitive information

- Reviewed filenames and visual content to ensure screenshots, recordings, and other resources are free of personal data

- Adopted cautious communication practices to prevent the accidental sharing of student data

- Provided informal guidance and support to colleagues on privacy-conscious content creation and safe data handling

- Framed GDPR not as a constraint but as a professional standard supporting ethical and inclusive learning

Evidence

Practical Application: Anonymisation & GDPR-by-Design

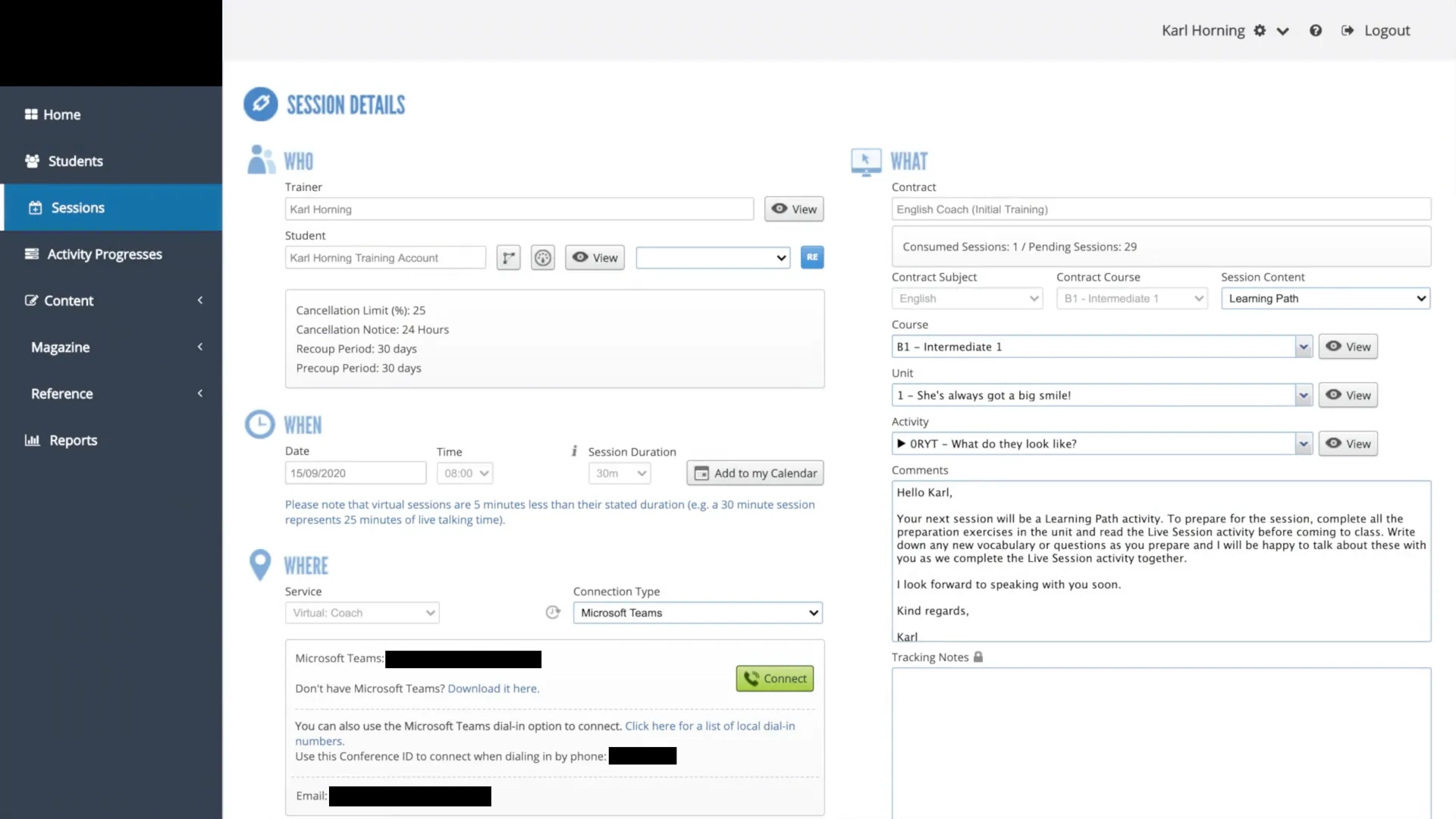

- Anonymised data in video tutorial (Joining a Microsoft Teams meeting from the Session Details Page, 2020) (Screenshot). Shows data minimisation in visual media; demonstrates safe screen-recording practice with no personally identifiable information (PII) exposed.

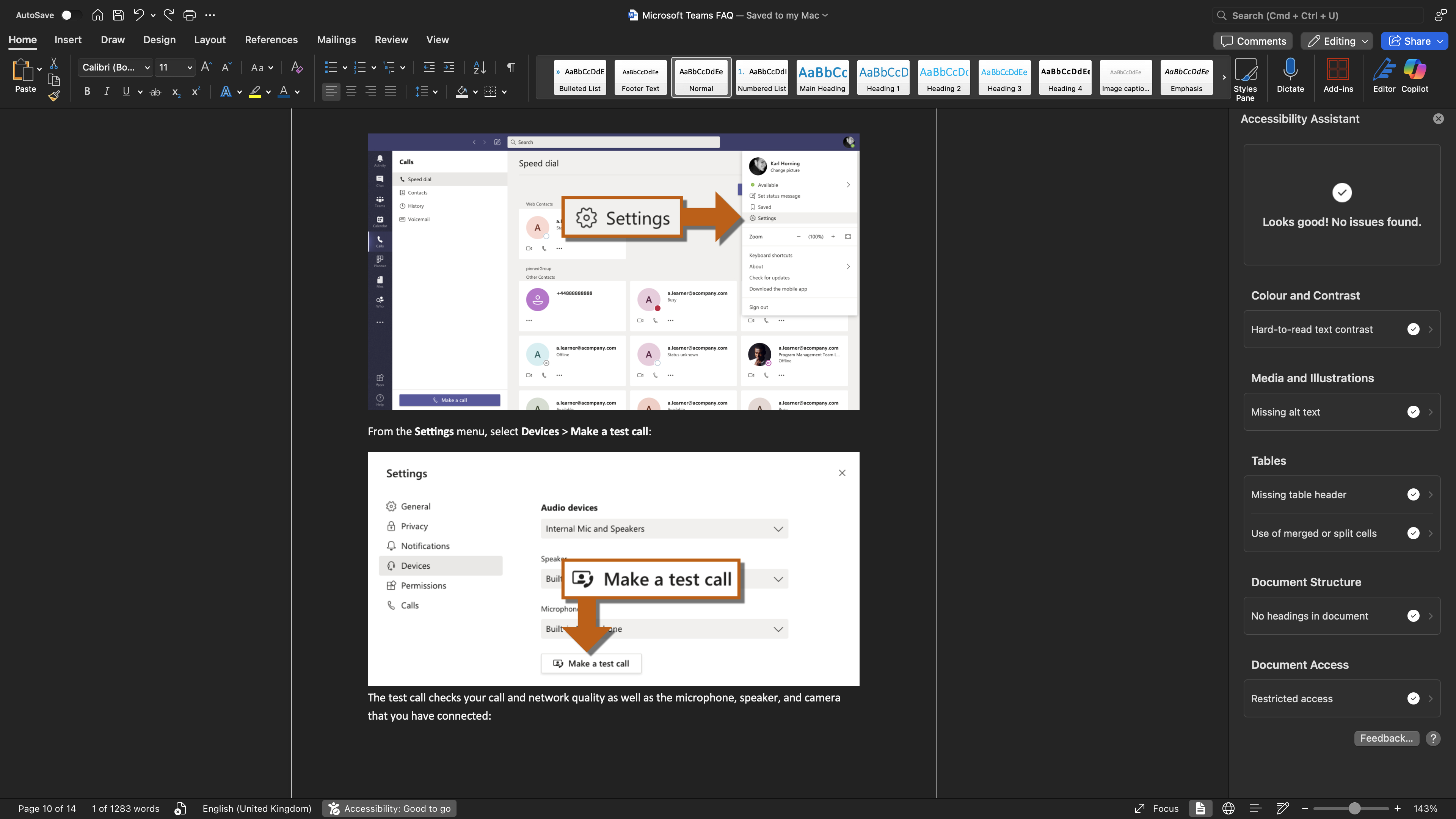

- Anonymised student-facing guide (Microsoft Teams FAQ, 2020) (Screenshot). Example of anonymised artefacts used in learner materials; aligns with institutional guidance on anonymisation and lawful processing.

Training & Institutional Compliance

- Data Protection Awareness course (Imperial College London, 2024) (Screenshot). Institution-specific training evidencing alignment with Imperial policies and procedures for lawful, ethical handling of data.

- An Introduction to the General Data Protection Regulation (GDPR) (Kevin Mitnick Security Awareness Training, 2024) (Certificate). Confirms current understanding of GDPR principles and risk-aware behaviours in digital learning contexts.

- General Data Protection Regulation (GDPR) (Learnlight, 2023) (Certificate). Ongoing professional development linking GDPR requirements to educational platforms and workflows.

- GDPR: Elementary (Kaspersky, 2021) (Certificate). Consolidates core concepts beyond foundational level; supports ethical handling of personal data across teams.

- GDPR: Beginner (Kaspersky, 2021) (Certificate). Baseline GDPR training establishing fundamental principles for subsequent practice and certification.

Further Reading

- Imperial College London. Data protection guidance. Available at: https://www.imperial.ac.uk/admin-services/secretariat/policies-and-guidance/guidance/

- Information Commissioner's Office. The UK GDPR. Available at: https://ico.org.uk/for-organisations/data-protection-and-the-eu/data-protection-and-the-eu-in-detail/the-uk-gdpr/

- Information Commissioner's Office. UK GDPR guidance and resources. Available at: https://ico.org.uk/for-organisations/uk-gdpr-guidance-and-resources/