1b: Technical Knowledge and Ability in the Use of Learning Technology

CMALT Guidance

You should show that you have used a range of learning technologies. These might include web pages and online resources, Virtual Learning Environments, electronic assessment, blogs, wikis, mobile technology, AR/VR, programming languages, or other relevant technologies. It is important to make it clear how the technology you discuss is being used for learning and/or teaching.

Evidence might include copies of certificates (originals not needed) from relevant training courses, screenshots of your work, a note from academic or support staff who have worked with you or, if appropriate, confirmation that the work is your own from your line manager.

Description

In my career as both a developer and a learning technologist, I have worked with a wide range of learning technologies, including VLE platforms, accessibility tools, and assessment environments. I have contributed to the evaluation and optimisation of Learning Management Systems (LMS) such as Blackboard Ultra, Canvas, Brightspace, and Moodle by customising workflows, testing features, and supporting academic staff in their use.

I've also developed bespoke tools using JavaScript, Python, and Excel, ranging from GraphQL APIs within a commercial LMS to Electron desktop applications for staff onboarding and training. These tools have improved platform efficiency, enhanced staff engagement, and often reduced technical barriers to adoption.

Key tools and technologies I've used in learning contexts include:

- VLEs: Blackboard Ultra, Brightspace, Canvas, Moodle

- Assessment & automation: Excel VBA, Python (Pandas), Postman (API testing), Mocha (backend test automation)

- User-focused development: Electron apps, API integrations, Postman-based testing workflows

- Accessibility: PDF/Word remediation, subtitle workflows, WCAG 2.1 and EN 301 549 audits

- Collaboration platforms: Confluence, Jira, GitHub, Agile boards for LMS implementation and evaluation

Reflection

Much of my work has bridged technical development and practical pedagogical application. At Learnlight, for example, I optimised GraphQL API responses and implemented automated backend testing to support a commercial LMS serving over 700,000 learners. While highly technical, this work had a direct educational impact: improved system performance translated to better student experiences.

One project that exemplifies this balance was my integration of the Oxford Learner's Dictionaries API Entry Fetcher into the Learnlight platform. I developed a Node.js proof of concept that fetched and reformatted dictionary entries into accessible, semantically structured HTML. Using Cheerio for parsing, I removed superfluous elements and applied lightweight styling to match our platform's design. This gave students reliable, accessible definitions without interrupting the flow of lessons.
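The clean-up step described above can be illustrated with a minimal sketch. The original proof of concept was written in Node.js with Cheerio; this version swaps in Python's standard-library `html.parser` purely to show the idea without third-party dependencies. The tag lists, the sample markup, and the names `EntryCleaner` and `clean_entry` are assumptions made for illustration, not the structure of the real dictionary entries, and the sketch assumes reasonably well-formed input.

```python
from html import escape
from html.parser import HTMLParser

# Hypothetical choices for illustration; the real entry markup is not shown here.
DROP_WITH_CONTENT = {"script", "style", "iframe", "button"}  # removed with their contents
KEEP_TAGS = {"h2", "p", "ol", "ul", "li", "em", "strong"}    # semantic tags to keep
VOID_TAGS = {"br", "img", "hr", "input", "meta", "link"}     # no end tag, simply dropped


class EntryCleaner(HTMLParser):
    """Keeps a small set of semantic tags, drops attributes and unwanted elements."""

    def __init__(self):
        super().__init__(convert_charrefs=True)
        self.out = []
        self.skip_depth = 0  # > 0 while inside an element being dropped

    def handle_starttag(self, tag, attrs):
        if tag in VOID_TAGS:
            return
        if self.skip_depth or tag in DROP_WITH_CONTENT:
            self.skip_depth += 1
            return
        if tag in KEEP_TAGS:
            # Attributes (class, style, ...) are discarded so platform styles apply.
            self.out.append(f"<{tag}>")

    def handle_endtag(self, tag):
        if tag in VOID_TAGS:
            return
        if self.skip_depth:
            self.skip_depth -= 1
            return
        if tag in KEEP_TAGS:
            self.out.append(f"</{tag}>")

    def handle_data(self, data):
        if not self.skip_depth:
            self.out.append(escape(data))


def clean_entry(raw_html: str) -> str:
    """Return a simplified HTML fragment for a dictionary entry."""
    cleaner = EntryCleaner()
    cleaner.feed(raw_html)
    cleaner.close()
    return "".join(cleaner.out)


if __name__ == "__main__":
    sample = (
        '<div class="entry"><h2 class="hw">learn</h2><script>track()</script>'
        '<p style="color:red">to gain <em>knowledge</em></p>'
        "<button>Share</button></div>"
    )
    print(clean_entry(sample))
```

Unknown wrapper elements such as the outer `div` are unwrapped rather than deleted, so their text survives while presentational markup does not. In a Cheerio version the same effect would typically come from selecting and removing unwanted nodes before serialising the result.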

All features I developed were designed to work within the Learnlight App, which was the primary interface used by students to access their online lessons. The platform was built for blended delivery, combining self-paced digital content with synchronous teaching, so mobile performance, accessibility, and seamless user experience were critical.

At Imperial College London, I brought this technical foundation into a more learner-facing role. I wrote API scripts based on LMS documentation (including Swagger specifications) and used Postman to evaluate endpoints related to identity and access management (IAM), data analytics, and system configuration. These scripts supported LMS evaluation by revealing system behaviours and limitations across platforms.

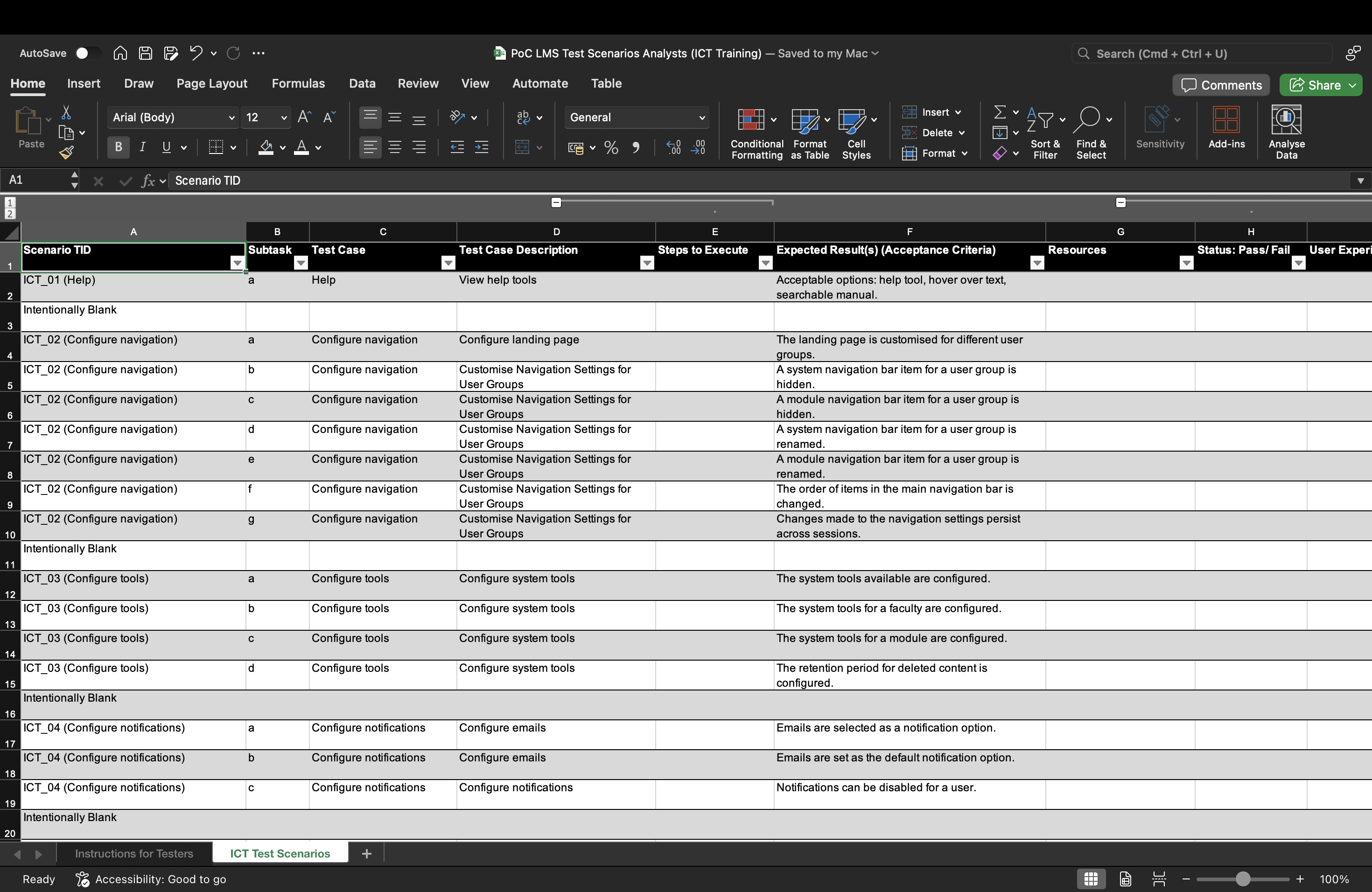
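As a rough illustration of the endpoint review described above, the sketch below reads a Swagger/OpenAPI document and groups its operations by tag, which is one way to decide which requests to build in Postman for each area (for example IAM or analytics). The sample specification, its paths and tags, and the function name `endpoints_by_tag` are invented for the example and do not reproduce any vendor's actual API.

```python
import json
from collections import defaultdict

HTTP_METHODS = {"get", "post", "put", "patch", "delete", "head", "options"}


def endpoints_by_tag(spec: dict) -> dict:
    """Group 'METHOD /path' strings by the tags declared on each operation."""
    grouped = defaultdict(list)
    for path, item in spec.get("paths", {}).items():
        for method, operation in item.items():
            if method.lower() not in HTTP_METHODS:
                continue  # skip path-level keys such as "parameters"
            for tag in operation.get("tags") or ["untagged"]:
                grouped[tag].append(f"{method.upper()} {path}")
    return {tag: sorted(ops) for tag, ops in sorted(grouped.items())}


# A tiny made-up specification, used only to show the shape of the input.
SAMPLE_SPEC = json.loads("""
{
  "swagger": "2.0",
  "paths": {
    "/users": {
      "get":  {"tags": ["users"], "summary": "List users"},
      "post": {"tags": ["users"], "summary": "Create a user"}
    },
    "/courses/{courseId}/memberships": {
      "parameters": [{"name": "courseId", "in": "path", "required": true, "type": "string"}],
      "get": {"tags": ["memberships"], "summary": "List course memberships"}
    }
  }
}
""")

if __name__ == "__main__":
    for tag, operations in endpoints_by_tag(SAMPLE_SPEC).items():
        print(tag)
        for operation in operations:
            print("  " + operation)
```

Grouping by tag keeps the resulting checklist aligned with how the documentation itself is organised, which makes it easier to compare equivalent areas across platforms.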

Alongside this, I developed manual test scripts aligned with real-world teaching needs, covering navigation structures, module aesthetics, bulk uploads, and user activity logs. These enabled broader staff participation in the evaluation process and informed my training delivery. The ICT Senior Analyst test script, for example, shows how I worked closely with academic and technical colleagues to understand their specific needs. By collecting user stories from IAM administrators and ICT Senior Analysts, I identified the API functionality that mattered most to their workflows, such as documentation accessibility, data request formats, and response limitations, which kept the test scripts directly relevant to real-world tasks. Combining API scripting with manual testing allowed me to explain platform capabilities and constraints from both user and developer perspectives.

Through these roles, I've gained a deep appreciation for scalable solutions, accessible design, and the practical constraints institutions face when adopting or changing systems. My work consistently aims to reduce technical friction, build user confidence, and support learning outcomes through thoughtful, hands-on use of technology.

Summary

This section shows how I:

- Used a wide range of learning technologies, including VLE platforms (Blackboard Ultra, Brightspace, Canvas, Moodle), assessment tools, and accessibility software

- Designed and developed bespoke tools using JavaScript, Python, GraphQL, and Electron to enhance learning delivery and staff training

- Created and tested APIs, including GraphQL and RESTful services, to integrate third-party resources such as the Oxford Learner's Dictionaries API into learning platforms

- Supported system evaluation processes through API scripting, automated testing (Mocha, Postman), and manual test plans aligned with teaching priorities

- Applied accessibility and inclusive design principles (for example, WCAG 2.1, EN 301 549) to ensure learning tools and resources were usable by all

- Developed features for a mobile-first blended learning platform (Learnlight App), where learners accessed self-paced and live lessons on the go

- Collaborated with academic and technical colleagues using Agile workflows, Jira boards, Confluence, and GitHub to evaluate and implement LMS solutions

- Demonstrated how technical developments directly supported learning and teaching by improving usability, access, and staff engagement

Evidence

- Postman collection developed from Blackboard Ultra's Swagger spec (Repository) Demonstrates use of API endpoint analysis to understand LMS functionality. Highlights the ability to translate technical documentation (Swagger) into practical testing workflows relevant to access management and system evaluation.

- Oxford Learner's Dictionaries API Entry Fetcher (Repository) A working Node.js prototype that fetches and transforms dictionary data for seamless integration into a blended learning platform. Demonstrates use of promises, Cheerio, and semantic HTML to improve mobile learning accessibility and user experience.

- ICT Analyst test script (Screenshot) Illustrates practical application of test plans designed to reflect academic workflows. Shows how technical tools were evaluated from a learning and teaching perspective, improving stakeholder confidence in platform usability.

- ICT Senior Analyst test script (Screenshot) Further evidence of applied testing aligned with curriculum delivery and administrative requirements. Supports dual perspective (technical and pedagogical) in LMS evaluation and training readiness.

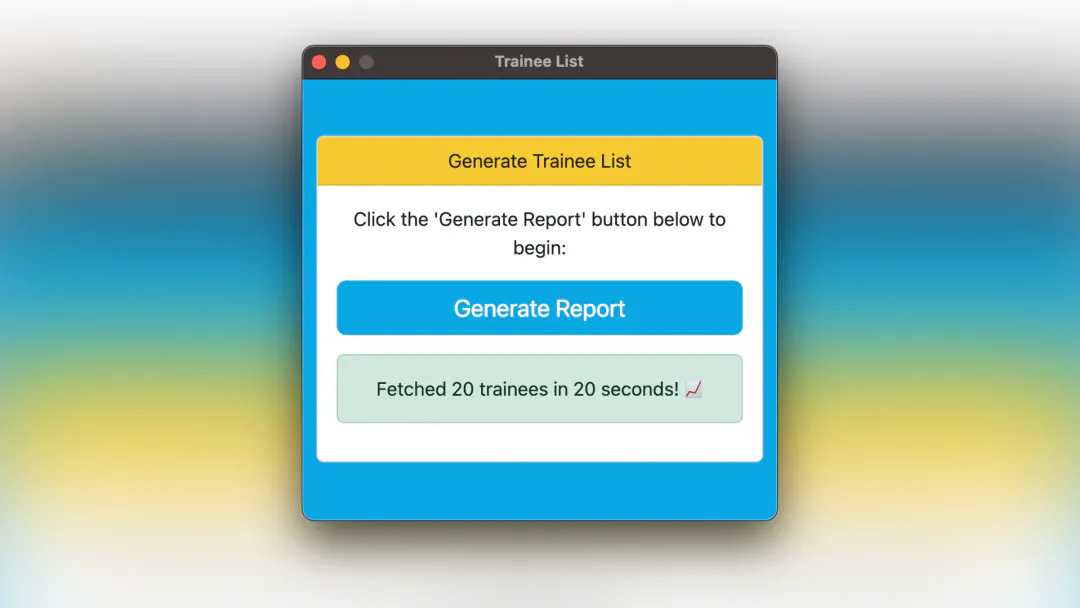

- Trainee List Electron app (Screenshot) Shows a bespoke desktop app built with Electron and JavaScript to streamline staff onboarding. Developed under institutional constraints, this demonstrates platform-specific deployment, reduced friction, and enhanced user autonomy.

- Modern GraphQL with Node (Udemy, 2023) (Certificate) Certificate demonstrating formal training in modern API development. Underpins evidence of GraphQL integration work and highlights continued professional development in technical skillsets supporting learning technologies.

Further Reading

- Cheerio. Cheerio: Fast, Flexible, and Lean Implementation of Core jQuery. npm. Available at: https://www.npmjs.com/package/cheerio

- Electron. Build Cross-Platform Desktop Apps with JavaScript, HTML, and CSS. Available at: https://www.electronjs.org/

- GraphQL Foundation. GraphQL: A Query Language for Your API. Available at: https://graphql.org/

- Mocha. Mocha: Simple, Flexible, Fun JavaScript Test Framework for Node.js & the Browser. Available at: https://mochajs.org/

- Oxford University Press. Oxford Learner's Dictionaries API. Available at: https://languages.oup.com/oxford-learners-dictionaries-api/

- Postman. Postman API Platform. Available at: https://www.postman.com/